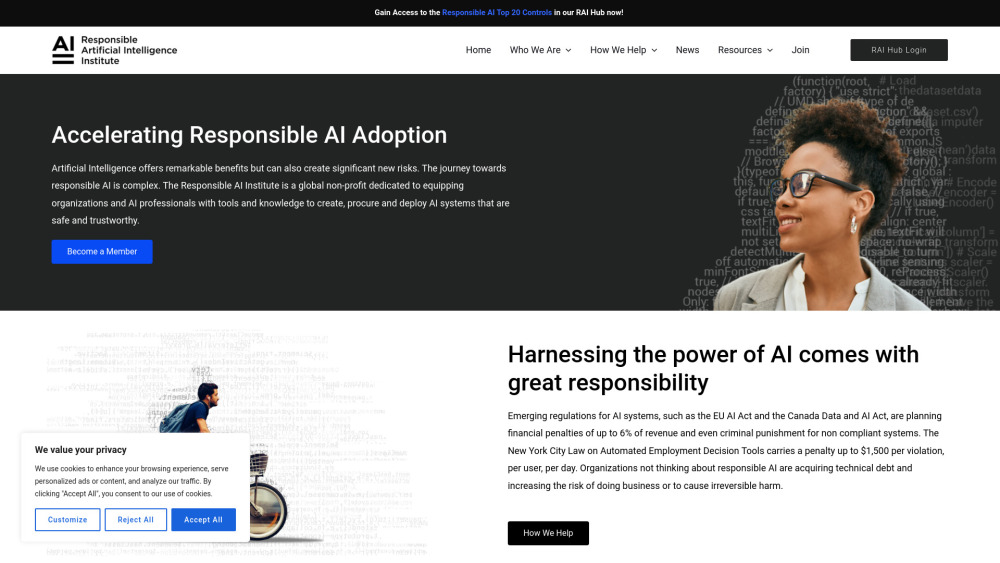

Responsible AI Institute

The Responsible AI Institute (RAI Institute) is a global, member-driven non-profit organization founded in 2016. It is dedicated to equipping organizations and AI professionals with the tools and knowledge they need to develop, procure, and deploy AI systems that are safe, trustworthy, and compliant with emerging regulations. Recognizing that AI carries both significant benefits and significant risks, the institute convenes conversations across industry, government, academia, and civil society to guide responsible AI development. Its services include independent conformity assessments, a responsible AI framework, and a member community that facilitates collaboration and knowledge sharing.

The institute provides templates, guides, and best practices to help organizations establish responsible AI policies and governance structures. It also offers a certification program and resources for benchmarking and improving responsible AI practices. Using these tools, organizations can work toward regulatory compliance for their AI systems, evaluate AI vendors for trustworthiness, establish internal governance policies, and manage AI-related risks more effectively.

This content is either user-submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines such as DuckDuckGo, Google Search, and SearXNG, as well as the tool's own website, with minimal to no human editing or review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This content is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.