Sutra

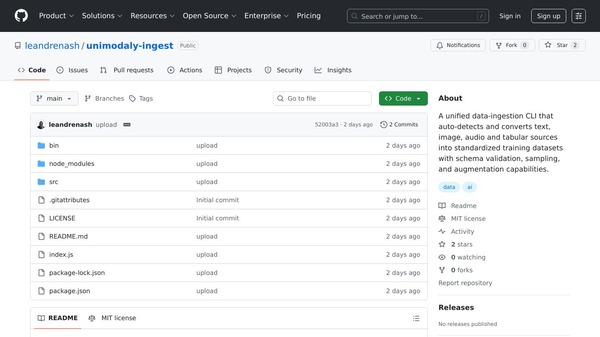

Sutra is a framework for building large language model (LLM) applications. It provides tools and libraries for creating, deploying, and managing LLM-based applications. Its modular architecture lets developers combine components such as data preprocessing, model training, and inference into a single workflow. The framework supports multiple programming languages and is compatible with popular machine learning frameworks. Sutra also includes built-in support for distributed computing, so LLM applications can be scaled across multiple nodes. It ships with pre-trained models and datasets for quick prototyping and deployment. The project is open source, with its code hosted on GitHub, and is backed by extensive documentation and community support.

Benefits

Sutra offers several advantages for developers working with large language models. Its modular architecture makes components easy to integrate, which streamlines development. Support for multiple programming languages and compatibility with popular machine learning frameworks make it versatile. Built-in distributed computing support lets applications scale efficiently. The bundled pre-trained models and datasets shorten the path from prototype to deployment. Extensive documentation and community support make the framework easier to learn and use.

Use Cases

Sutra can be used wherever large language models are applied. It is aimed at developers who create, deploy, and manage LLM-based applications. Its modular architecture and multi-language support suit a wide range of applications, from natural language processing to data analysis. Built-in distributed computing support allows applications to scale, which makes the framework suited to large-scale projects. Its pre-trained models and datasets enable quick prototyping and deployment in both research and commercial settings.

Additional Information

Sutra is an open-source project whose source code is available on GitHub. This openness invites community contributions and collaboration around the framework. Its documentation and community support make it accessible to developers.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
