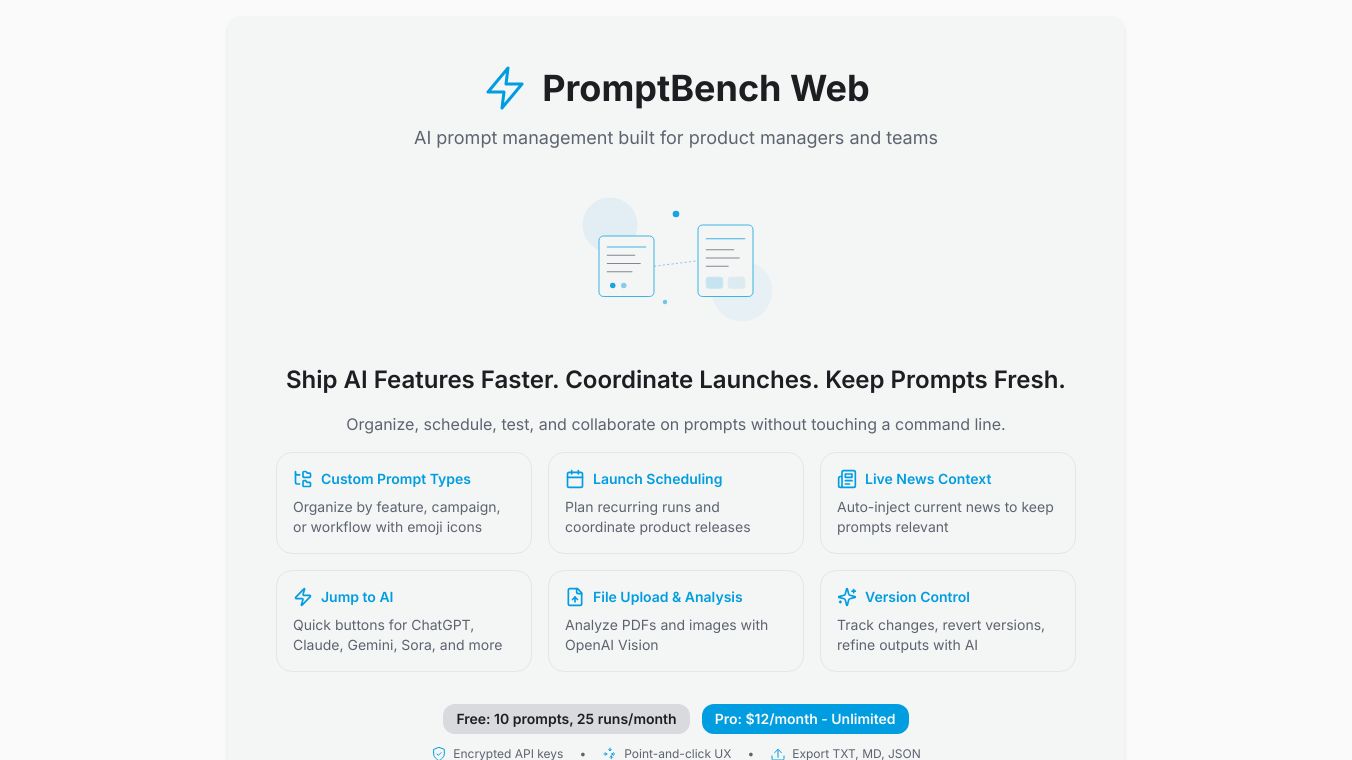

PromptBench

PromptBench is a tool for researchers and developers who need to evaluate large language models (LLMs). It provides a standardized way to test how well models handle different tasks, which makes fair comparison easier. Its coverage spans reasoning, knowledge, bias, and toxicity, helping identify where each model performs well and where it falls short. PromptBench is open source and accepts community contributions, works for both small and large evaluations, and is continuously updated with new benchmarks and tasks.

