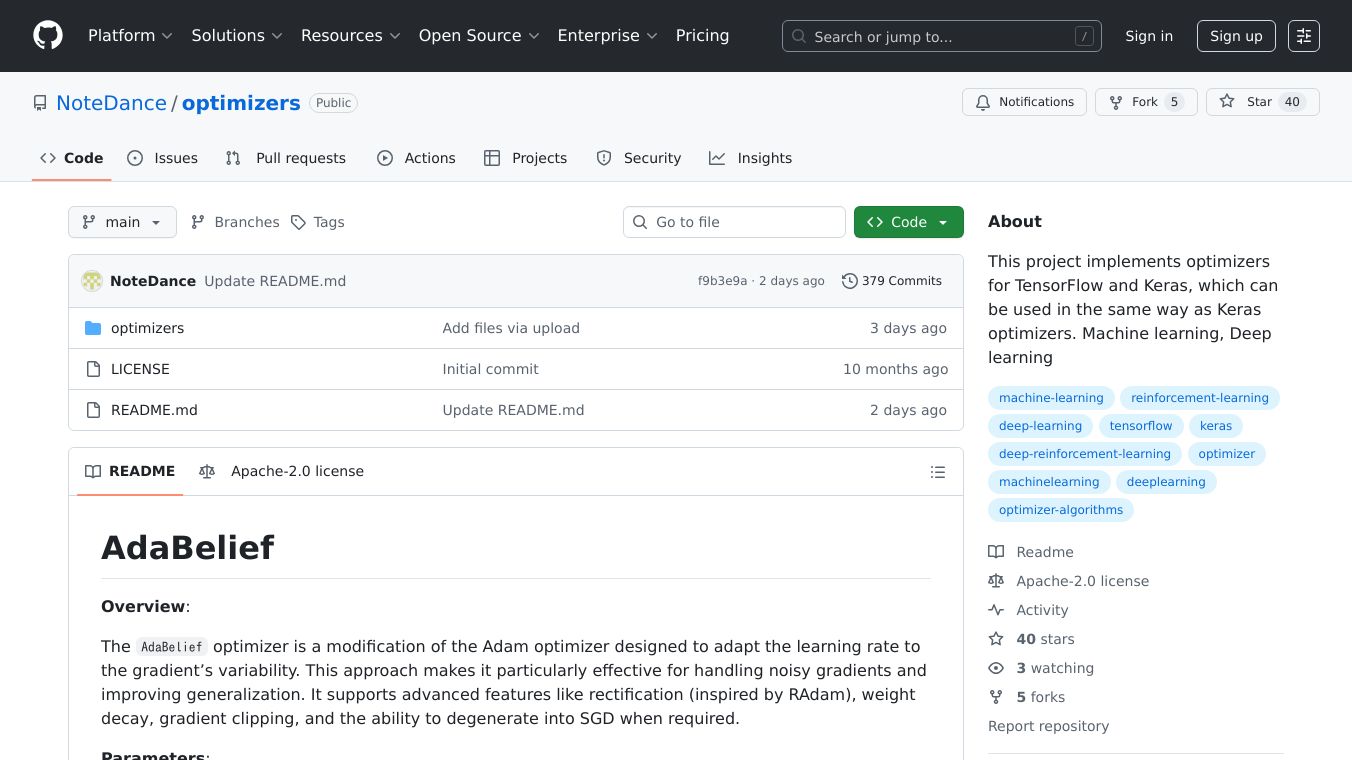

optimizers

What is optimizers?

optimizers is a project that implements a range of optimizers for TensorFlow and Keras. They can be used in the same way as built-in Keras optimizers, so they integrate easily into existing machine learning workflows. The collection includes several advanced optimizers aimed at improving the training performance and efficiency of deep learning models.

Benefits

The optimizers in this project offer several key benefits:

- Improved Performance: Many of the optimizers are designed to cope with noisy gradients and to improve generalization, which can lead to better model performance.

- Flexibility: The optimizers support a wide range of features, such as weight decay, gradient clipping, and momentum, allowing users to tailor the optimization process to their specific needs.

- Ease of Use: Since these optimizers are compatible with Keras, they can be easily integrated into existing TensorFlow and Keras workflows without requiring significant changes to the code.
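To make the Flexibility point concrete, here is a minimal plain-Python sketch of how gradient clipping, momentum, and weight decay typically combine in a single update step. It is not code from the optimizers project: the function names `clip_by_global_norm` and `sgd_step`, the decoupled (AdamW-style) form of weight decay, and the default hyperparameters are illustrative assumptions. Parameters and gradients are plain lists of floats so the example runs without TensorFlow.

```python
import math
from typing import List, Tuple


def clip_by_global_norm(grads: List[float], clip_norm: float) -> List[float]:
    """Rescale grads so that their combined L2 norm is at most clip_norm."""
    norm = math.sqrt(sum(g * g for g in grads))
    if norm <= clip_norm:
        return list(grads)  # already within the limit, leave untouched
    scale = clip_norm / norm
    return [g * scale for g in grads]


def sgd_step(
    params: List[float],
    grads: List[float],
    velocity: List[float],
    lr: float = 0.1,
    momentum: float = 0.9,
    weight_decay: float = 0.0,
    clip_norm: float = 1.0,
) -> Tuple[List[float], List[float]]:
    """One SGD step with global-norm clipping, momentum, and decoupled weight decay.

    Hypothetical helper for illustration; not the project's API.
    """
    grads = clip_by_global_norm(grads, clip_norm)
    # Momentum: accumulate an exponentially weighted running direction.
    new_velocity = [momentum * v + g for v, g in zip(velocity, grads)]
    new_params = [
        # Decoupled decay shrinks weights directly instead of via the gradient.
        p - lr * v - lr * weight_decay * p
        for p, v in zip(params, new_velocity)
    ]
    return new_params, new_velocity


if __name__ == "__main__":
    params, velocity = [1.0, -2.0], [0.0, 0.0]
    for _ in range(3):
        grads = [2 * p for p in params]  # gradient of sum(p**2)
        params, velocity = sgd_step(params, grads, velocity, weight_decay=0.01)
    print(params)
```

A Keras-compatible optimizer applies the same arithmetic to each variable's tensor. Clipping here uses the global norm across all gradients, which is one of several common clipping variants (per-variable norm clipping is another).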

Use Cases

The optimizers in this project can be used in various machine learning and deep learning tasks, including:

- Training Deep Neural Networks: The optimizers are aimed primarily at training deep neural networks, where they can help improve convergence and generalization.

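- Handling Noisy Gradients: Some optimizers, such as AdaBelief, are designed to handle noisy gradients. AdaBelief scales each step by how far the observed gradient deviates from its running average, taking smaller steps when gradients are erratic, which makes it suitable for tasks with noisy data or complex models.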

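- Large-Batch Training: Optimizers such as LARS (Layer-wise Adaptive Rate Scaling) are designed for large-batch training. LARS scales each layer's learning rate by the ratio of its weight norm to its gradient norm, which helps keep training stable at large batch sizes and makes it well suited to training models on large datasets.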
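The two mechanisms named above can be sketched in plain Python, following the update rules described in the AdaBelief and LARS papers. AdaBelief replaces Adam's running average of squared gradients with a running average of the squared deviation `(g - m)**2`; LARS multiplies the global learning rate by a per-layer trust ratio. This is not the project's implementation: the function names `adabelief_step`, `lars_trust_ratio`, and `lars_step`, the default hyperparameters, and the fallback trust ratio of 1.0 for zero norms are assumptions for illustration.

```python
import math
from typing import List, Tuple


def adabelief_step(
    theta: List[float], grad: List[float], m: List[float], s: List[float], t: int,
    lr: float = 1e-3, beta1: float = 0.9, beta2: float = 0.999, eps: float = 1e-8,
) -> Tuple[List[float], List[float], List[float]]:
    """One AdaBelief update on a list of scalar parameters; t counts steps from 1."""
    new_theta, new_m, new_s = [], [], []
    for p, g, m_i, s_i in zip(theta, grad, m, s):
        m_i = beta1 * m_i + (1 - beta1) * g
        # Unlike Adam (which averages g**2), track how far g deviates from m.
        s_i = beta2 * s_i + (1 - beta2) * (g - m_i) ** 2 + eps
        m_hat = m_i / (1 - beta1 ** t)  # bias correction
        s_hat = s_i / (1 - beta2 ** t)
        # Large deviation -> large s_hat -> smaller, more cautious step.
        new_theta.append(p - lr * m_hat / (math.sqrt(s_hat) + eps))
        new_m.append(m_i)
        new_s.append(s_i)
    return new_theta, new_m, new_s


def lars_trust_ratio(
    weights: List[float], grads: List[float],
    trust_coef: float = 0.001, weight_decay: float = 0.0,
) -> float:
    """LARS layer-wise local learning rate: eta * ||w|| / (||g|| + wd * ||w||)."""
    w_norm = math.sqrt(sum(w * w for w in weights))
    g_norm = math.sqrt(sum(g * g for g in grads))
    if w_norm == 0.0 or g_norm == 0.0:
        return 1.0  # assumed fallback: use the global learning rate unchanged
    return trust_coef * w_norm / (g_norm + weight_decay * w_norm)


def lars_step(
    weights: List[float], grads: List[float], velocity: List[float],
    lr: float = 0.1, momentum: float = 0.9,
    trust_coef: float = 0.001, weight_decay: float = 0.0,
) -> Tuple[List[float], List[float]]:
    """One LARS momentum update for a single layer's weights (hypothetical helper)."""
    local_lr = lars_trust_ratio(weights, grads, trust_coef, weight_decay)
    new_v = [
        momentum * v + lr * local_lr * (g + weight_decay * w)
        for v, g, w in zip(velocity, grads, weights)
    ]
    new_w = [w - v for w, v in zip(weights, new_v)]
    return new_w, new_v
```

Because the trust ratio is computed per layer, layers whose gradients are large relative to their weights take proportionally smaller steps, which is the property that lets LARS tolerate the higher global learning rates used with large batches.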

Pricing

No pricing information is listed for the optimizers project. Because it is open source and published on GitHub, the code can be used free of charge under the terms of its license; check the repository for the exact license terms.

Vibes

Public reception and reviews for the optimizers project are not documented here. For user experiences and feedback, check the project's GitHub repository, online forums, and community discussions.

Additional Information

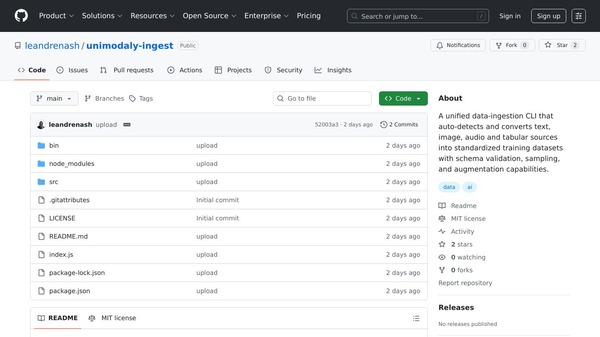

The optimizers project is open-source and available on GitHub. It is maintained by the NoteDance organization, and the repository includes detailed documentation and example usage for each optimizer. The project is licensed under an open-source license, allowing users to freely use, modify, and distribute the code.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.