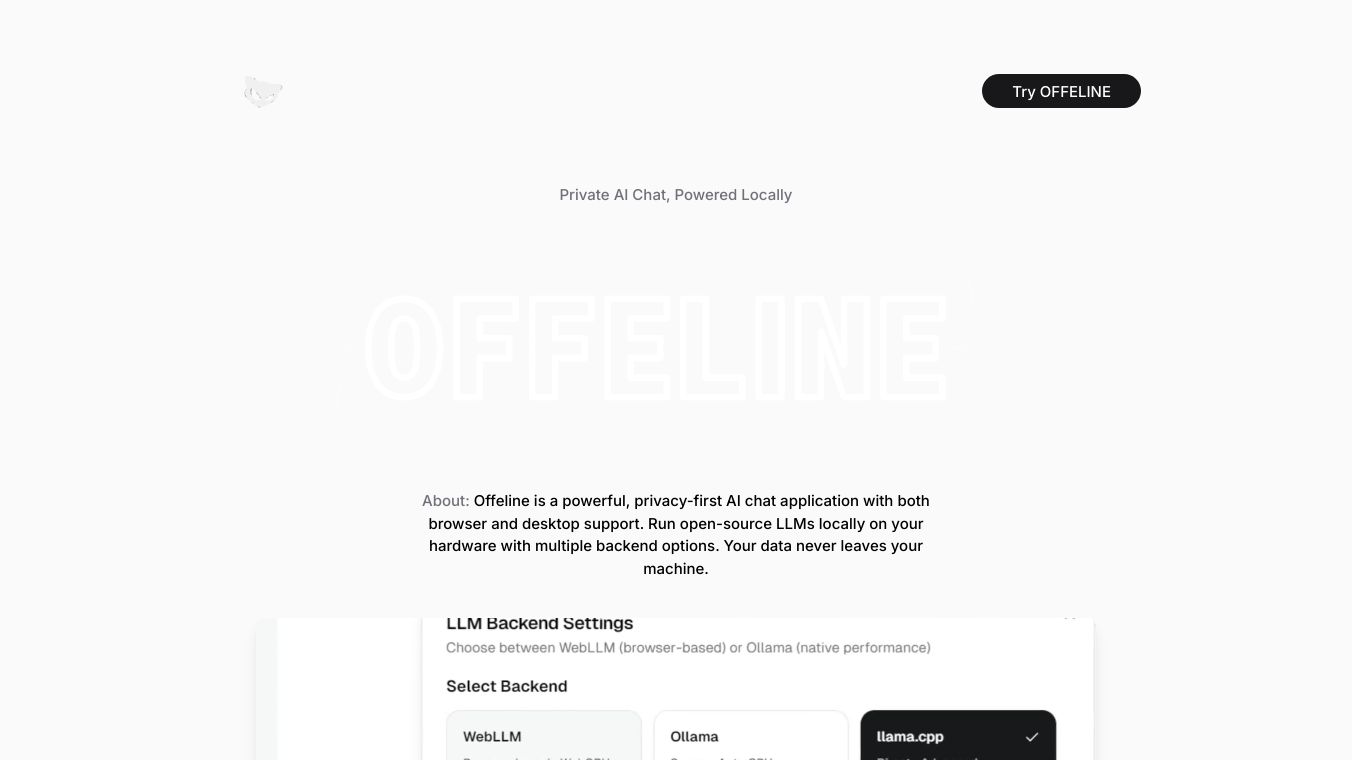

Offeline

What is Offeline?

Offeline is a privacy-focused AI chat application that lets you run powerful AI models on your own devices. Whether you use it in a web browser or as a desktop application, Offeline keeps your data private by running the models locally. This means your information is not sent to external servers (apart from optional features such as web search), giving you complete control over your data.

Benefits

Offeline offers several key advantages:

- Complete Privacy: All AI models run on your hardware, ensuring your data never leaves your machine.

- Multi-Platform Support: Access Offeline through a web browser or a native desktop application, for a consistent experience across your devices.

- Offline Capability: Download AI models once and use them offline indefinitely. WebGPU mode enables true offline operation.

- Web Search Integration: When enabled, real-time web search via Tavily or DuckDuckGo supplements the model's built-in knowledge with up-to-date information.

- File Embeddings: Load documents of various types (PDF, MD, DOCX, TXT, CSV, RTF) and ask questions about them, with all document processing and analysis done locally.

- Voice Support: Interact with the AI using voice messages, allowing for natural and hands-free communication.

- Regenerate Responses: Quickly regenerate AI responses without retyping prompts, making it easy to refine and iterate on your conversations.

- Chat History: Maintain persistent, organized conversation history across sessions, ensuring you never lose important chats.

- Custom Memory: Personalize AI behavior with custom system prompts and memory, making the AI truly yours.
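File Embeddings features of this kind usually follow a common local retrieval pattern: split a document into chunks, turn each chunk into a vector, and place the chunks most similar to the question into the prompt. The sketch below illustrates that general pattern under stated assumptions; it is not Offeline's implementation. A simple bag-of-words term-frequency vector stands in for a real embedding model, and the names `chunk_text`, `embed`, `cosine`, `top_k`, and `build_prompt`, as well as the chunk size and overlap values, are hypothetical.

```python
import math
import re
from collections import Counter


def chunk_text(text: str, size: int = 40, overlap: int = 10) -> list[str]:
    """Split text into overlapping windows of `size` words (requires overlap < size)."""
    words = text.split()
    if not words:
        return []
    step = size - overlap
    # Only start a window that reaches past the previous window's end,
    # so no chunk is a pure subset of the one before it.
    return [" ".join(words[i:i + size]) for i in range(0, max(len(words) - overlap, 1), step)]


def embed(text: str) -> Counter:
    """Hypothetical stand-in for an embedding model: lowercase term-frequency counts."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse term-frequency vectors (0.0 if either is empty)."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_k(question: str, chunks: list[str], k: int = 2) -> list[str]:
    """Return the k chunks most similar to the question."""
    q = embed(question)
    return sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)[:k]


def build_prompt(question: str, context: list[str]) -> str:
    """Combine retrieved chunks and the question into one prompt for the local model."""
    joined = "\n---\n".join(context)
    return f"Answer using only this context:\n{joined}\n\nQuestion: {question}"
```

For example, `top_k("When is the invoice due?", ["The invoice is due on March 3.", "Our office is closed on weekends."], k=1)` selects the invoice sentence, which `build_prompt` then places ahead of the question. A real setup would replace `embed` with a neural embedding model running on the same machine, which is what keeps the documents local.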

Use Cases

Offeline is versatile and can be used in various scenarios:

- Personal Assistant: Use Offeline as a private AI assistant to manage tasks, set reminders, and get information without worrying about data privacy.

- Document Analysis: Load and analyze documents locally, making it ideal for researchers, students, and professionals who need to process sensitive information.

- Educational Tool: Enhance learning by asking questions about documents and getting up-to-date answers with web search integration.

- Creative Writing: Use voice support and custom memory to brainstorm ideas, write, and refine creative projects.

Supported Backends

Offeline supports multiple backends to cater to different user needs:

- WebGPU: Run models directly in your browser using GPU acceleration. This option requires no installation, and inference runs entirely inside the browser.

- Ollama: Manage and run models with the Ollama backend, which supports Windows, macOS, and Linux and is known for straightforward model management and broad compatibility.

- llama.cpp: A performance-focused backend that provides optimized CPU and GPU inference on the desktop through direct integration.
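With the Ollama backend, a client talks to a locally running Ollama server over HTTP; by default Ollama listens on `http://localhost:11434` and accepts chat requests at `/api/chat`. The Python sketch below shows the general shape of such a request: a system prompt (as with Custom Memory) goes first, prior turns (as with Chat History) follow, and a helper drops the last assistant reply so the same prompt can be re-sent, mirroring what a regenerate action typically does. This is an illustration under assumptions, not Offeline's own code; the helper names and the model name `llama3.2` are hypothetical, and the model must already be pulled. llama.cpp's `llama-server` instead exposes an OpenAI-compatible `/v1/chat/completions` endpoint, which returns the reply under `choices[0].message.content`.

```python
import json
import urllib.request

# Ollama's default local chat endpoint; change it if your server uses another host or port.
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def build_chat_payload(model: str, system_prompt: str, history: list[dict], user_msg: str) -> dict:
    """Assemble a non-streaming /api/chat body: system prompt, prior turns, then the new message."""
    messages = [{"role": "system", "content": system_prompt}]
    messages.extend(history)  # builds a new list; `history` itself is not modified
    messages.append({"role": "user", "content": user_msg})
    return {"model": model, "messages": messages, "stream": False}


def messages_for_regenerate(messages: list[dict]) -> list[dict]:
    """Return the conversation without its trailing assistant reply, ready to be re-sent."""
    if messages and messages[-1]["role"] == "assistant":
        return messages[:-1]
    return list(messages)


def send_chat(payload: dict, url: str = OLLAMA_CHAT_URL) -> str:
    """POST the payload to a local Ollama server and return the assistant's reply text."""
    request = urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as response:
        body = json.load(response)
    # A non-streaming /api/chat response carries the reply under message.content.
    return body["message"]["content"]


# Example (requires a running Ollama server with the hypothetical model "llama3.2" pulled):
# payload = build_chat_payload("llama3.2", "You are a concise assistant.", [], "What is WebGPU?")
# print(send_chat(payload))
```

Because every request carries the full message list, regenerating a response only requires trimming the last assistant turn and sending the remaining messages again; nothing leaves the machine other than the request to the local server.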

Additional Information

Offeline is open source, so developers can inspect, customize, and contribute to it. This openness invites community contributions and support, helping Offeline keep improving as a tool for AI enthusiasts and professionals alike.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
