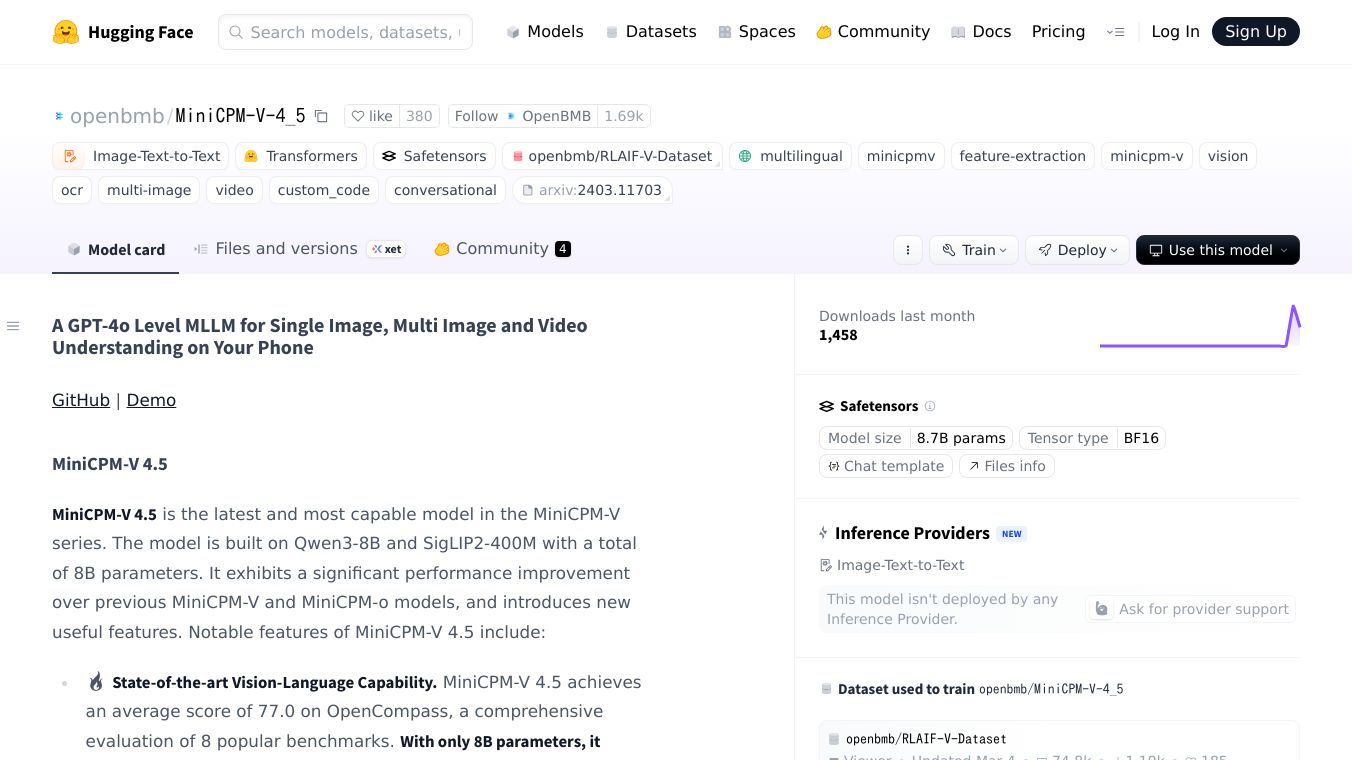

MiniCPM-V 4.5

MiniCPM-V 4.5: A Powerful AI Model for Image and Video Understanding

MiniCPM-V 4.5 is the latest and most capable model in the MiniCPM-V series. It is built on Qwen3-8B and SigLIP2-400M with a total of 8 billion parameters. This model offers significant performance improvements over previous versions and introduces new useful features.

Benefits

- State-of-the-art Vision-Language Capability: MiniCPM-V 4.5 achieves an average score of 77.0 on OpenCompass, a comprehensive evaluation of 8 popular benchmarks. With only 8 billion parameters, it surpasses widely used proprietary models like GPT-4o-latest, Gemini-2.0 Pro, and strong open-source models like Qwen2.5-VL 72B for vision-language capabilities, making it the most performant MLLM under 30 billion parameters.

- Efficient High Refresh Rate and Long Video Understanding: Powered by a new unified 3D-Resampler over images and videos, MiniCPM-V 4.5 can achieve a 96x compression rate for video tokens. This means it can perceive significantly more video frames without increasing the LLM inference cost, bringing state-of-the-art high refresh rate (up to 10FPS) video understanding and long video understanding capabilities on various benchmarks.

- Controllable Hybrid Fast/Deep Thinking: MiniCPM-V 4.5 supports both fast thinking for efficient frequent usage with competitive performance, and deep thinking for more complex problem-solving. This fast/deep thinking mode can be switched in a highly controlled fashion to cover efficiency and performance trade-offs in different user scenarios.

- Strong OCR, Document Parsing, and Multilingual Capabilities: Based on its architecture, MiniCPM-V 4.5 can process high-resolution images with any aspect ratio and up to 1.8 million pixels. It achieves leading performance on OCRBench, surpassing proprietary models such as GPT-4o-latest and Gemini 2.5. It also excels in PDF document parsing capability on OmniDocBench among general MLLMs. The model features trustworthy behaviors, outperforming GPT-4o-latest on MMHal-Bench, and supports multilingual capabilities in more than 30 languages.

- Easy Usage: MiniCPM-V 4.5 can be easily used in various ways, including support for efficient CPU inference on local devices, format quantized models in 16 sizes, high-throughput and memory-efficient inference, fine-tuning on new domains and tasks, quick deployment, optimization on iPhone and iPad, and an online web demo.

Use Cases

MiniCPM-V 4.5 can be used in a variety of applications, including:*Image and Video Analysis: Analyzing images and videos for various purposes, such as object recognition, scene understanding, and content moderation.*Document Processing: Extracting information from documents, including text, tables, and other structured data.*Multilingual Applications: Supporting applications that require multilingual capabilities, such as translation, language learning, and cross-lingual information retrieval.*Efficient Inference: Running on local devices with efficient CPU inference, making it suitable for edge computing and mobile applications.

Pricing

The model and weights of MiniCPM-V 4.5 are completely free for academic research. After filling out a registration form, the weights are also available for free commercial use.

Vibes

MiniCPM-V 4.5 has been deployed on iPad M4 with impressive results. The demo video showcases the model's capabilities in real-world scenarios, demonstrating its ability to understand and analyze images and videos effectively.

Additional Information

MiniCPM-V 4.5 is part of a series of multimodal projects developed by a team of researchers. The model's performance and capabilities have been validated through comprehensive evaluations and benchmarks. The code in the repository is released under the License, and the usage of MiniCPM-V series model weights must strictly follow the provided guidelines.

For more information, you can explore the key techniques of MiniCPM-V 4.5 and other multimodal projects of the team. If you find the work helpful, please consider citing the papers and liking the project.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.

Comments

Please log in to post a comment.