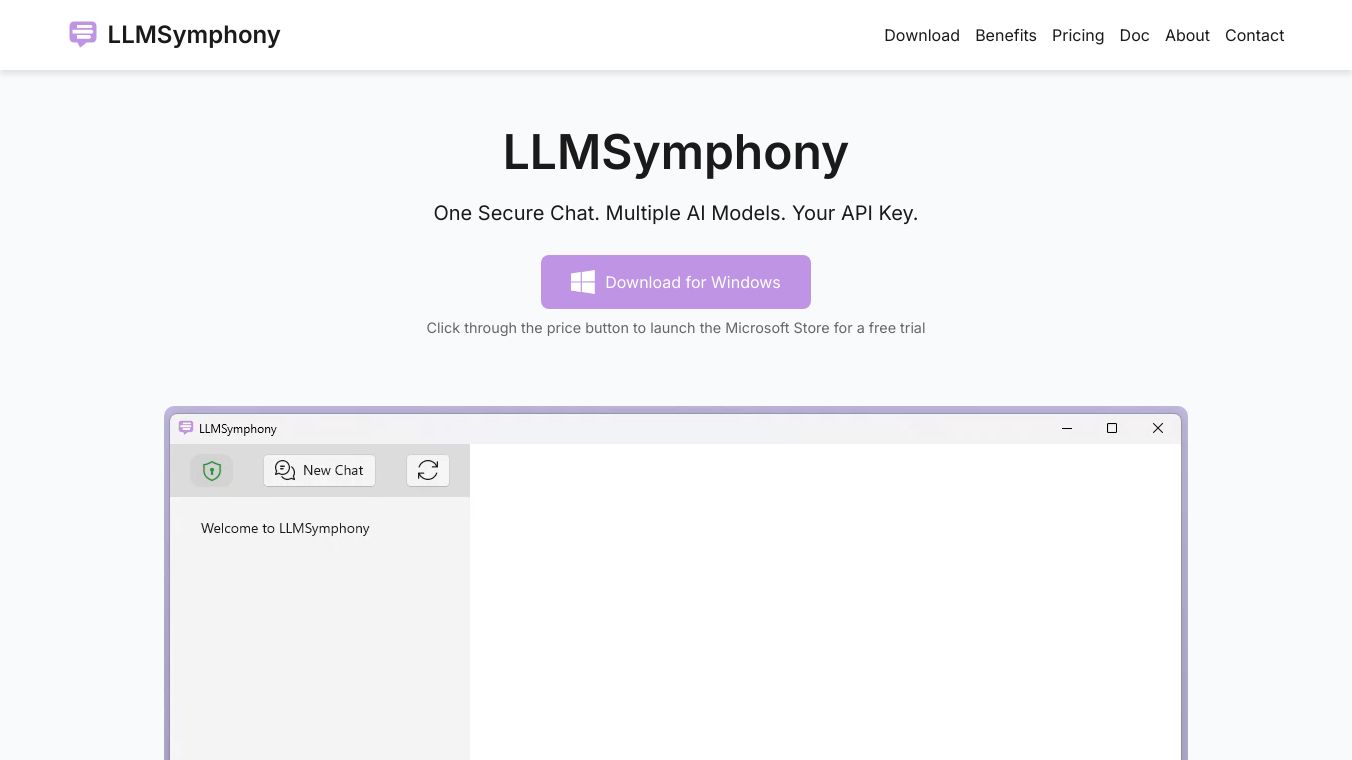

LLMSymphony

LLMSymphony is a system that helps large language models (LLMs) run more efficiently. LLMs power many tools we use daily, such as chatbots and AI code generators. But serving them means managing memory, which gets difficult in long, complex conversations. When memory is handled poorly, responses slow down and fewer people can use the system at once.

Benefits

LLMSymphony addresses this by managing memory more intelligently. It uses early hints, such as a user starting to type, to predict when a new request is about to arrive, and it gets the memory for that request ready ahead of time so the reply can start quickly. The result is faster responses, better use of resources, and happier users. It also lowers running costs and lets the system handle more users at the same time.
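The hint-driven idea described above can be illustrated with a small sketch. This is not LLMSymphony's actual implementation: the class `HintedCacheManager`, its method names, and the two-tier "fast" and "slow" memory model with least-recently-used eviction are all assumptions made for illustration.

```python
from collections import OrderedDict


class HintedCacheManager:
    """Toy model of hint-driven memory placement (all names are hypothetical)."""

    def __init__(self, fast_capacity: int):
        if fast_capacity < 1:
            raise ValueError("fast_capacity must be at least 1")
        self.fast_capacity = fast_capacity
        self.fast = OrderedDict()  # session_id -> state, kept in LRU order
        self.slow = {}             # session_id -> state that was offloaded

    def _promote(self, session_id: str) -> None:
        # Bring a session's state into fast memory. If fast memory is full,
        # offload the least recently used session to slow memory first.
        if session_id in self.fast:
            self.fast.move_to_end(session_id)
            return
        state = self.slow.pop(session_id, [])
        while len(self.fast) >= self.fast_capacity:
            victim, victim_state = self.fast.popitem(last=False)
            self.slow[victim] = victim_state
        self.fast[session_id] = state

    def on_typing_hint(self, session_id: str) -> None:
        # Early hint: the user started typing, so a request is likely soon.
        self._promote(session_id)

    def on_request(self, session_id: str, message: str) -> bool:
        # Returns True if the state was already in fast memory on arrival,
        # meaning the reply could start without waiting for a load.
        hit = session_id in self.fast
        self._promote(session_id)
        self.fast[session_id].append(message)
        return hit


manager = HintedCacheManager(fast_capacity=1)
manager.on_request("alice", "hi")        # first request, nothing cached yet
manager.on_request("bob", "hello")       # alice's state is offloaded
manager.on_typing_hint("alice")          # alice starts typing again
ready = manager.on_request("alice", "still there?")  # state already loaded
```

In this toy model, the typing hint moves the loading work ahead of the request, so the request itself finds its state already in place. A real serving system would also have to handle hints that never turn into requests and decide which sessions are worth keeping in fast memory.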

Use Cases

LLMSymphony can help with many AI workloads. For instance, a customer service site using LLMSymphony can handle more questions at once while keeping replies quick. This makes customers happier and cuts wait times. The same applies to other LLM-driven jobs, such as generating reports, answering customer questions around the clock, or helping workers with research and analysis. In each case, more efficient serving lowers running costs.

Vibes

In tests using real chatbot conversations, LLMSymphony handled up to 8 times more users than other systems while keeping responses fast. This suggests the approach is effective at improving LLM applications.

