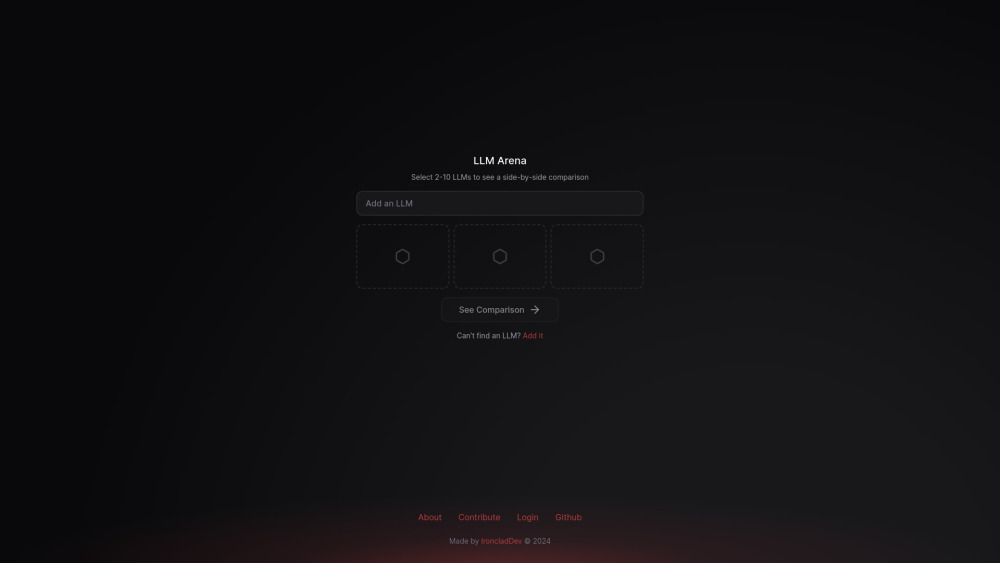

LLM Arena

LLM Arena is a user-friendly tool for evaluating and comparing large language models (LLMs). It provides a level playing field on which different models are compared under the same conditions. Originally conceived by Amjad Masad, CEO of Replit, LLM Arena was developed over six months as an accessible platform for comparing LLMs side by side. The platform is open to the community: users can contribute new models and take part in evaluations.

Highlights:

- Open-source platform for comparing LLMs

- Crowdsourced evaluation system

- Elo rating system to rank models

- Community contribution model

Key Features:

- Side-by-side LLM comparison

- Crowdsourced evaluation

- Elo rating system

- Open contribution model
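The listing does not say how LLM Arena turns crowdsourced side-by-side votes into rankings. As a rough illustration of the Elo approach it mentions, the sketch below replays a list of pairwise votes with the standard Elo update and prints a leaderboard. The starting rating of 1000, the K-factor of 32, the vote format `(model_a, model_b, score_a)`, and the model names are all hypothetical choices for this example, not the platform's documented settings.

```python
from collections import defaultdict

# Assumed parameters: common Elo defaults, not values documented for LLM Arena.
INITIAL_RATING = 1000.0
K_FACTOR = 32.0


def expected_score(rating_a: float, rating_b: float) -> float:
    """Probability that A beats B under the standard Elo logistic model."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400.0))


def update(ratings: dict, model_a: str, model_b: str, score_a: float) -> None:
    """Apply one side-by-side vote.

    score_a is 1.0 if A's answer was preferred, 0.5 for a tie, 0.0 if B's was.
    """
    ra, rb = ratings[model_a], ratings[model_b]
    ea = expected_score(ra, rb)
    # Each model moves by K times (actual score - expected score);
    # the two changes cancel out, so the total rating pool is conserved.
    ratings[model_a] = ra + K_FACTOR * (score_a - ea)
    ratings[model_b] = rb + K_FACTOR * ((1.0 - score_a) - (1.0 - ea))


def leaderboard(votes):
    """Replay votes in order and return (model, rating) pairs, highest first."""
    ratings = defaultdict(lambda: INITIAL_RATING)
    for model_a, model_b, score_a in votes:
        update(ratings, model_a, model_b, score_a)
    return sorted(ratings.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical model names and votes, for illustration only.
    votes = [
        ("model-x", "model-y", 1.0),
        ("model-y", "model-z", 0.5),
        ("model-x", "model-z", 1.0),
    ]
    for name, rating in leaderboard(votes):
        print(f"{name}: {rating:.1f}")
```

Because Elo updates are applied one vote at a time, the final ratings depend on the order in which votes are replayed; a real leaderboard may handle this differently (for example by fitting all votes at once), which this sketch does not attempt.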

Benefits:

- Standardized platform for LLM evaluation

- Community participation and contribution

- Real-world testing scenarios

- Transparent model comparison

Use Cases:

- AI research benchmarking

- LLM selection for applications

- Educational tool

- Product comparison

This content is either user-submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources (search engines such as DuckDuckGo, Google Search, and SearXNG, as well as the tool's own website), with minimal to no human editing or review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.