LLM API

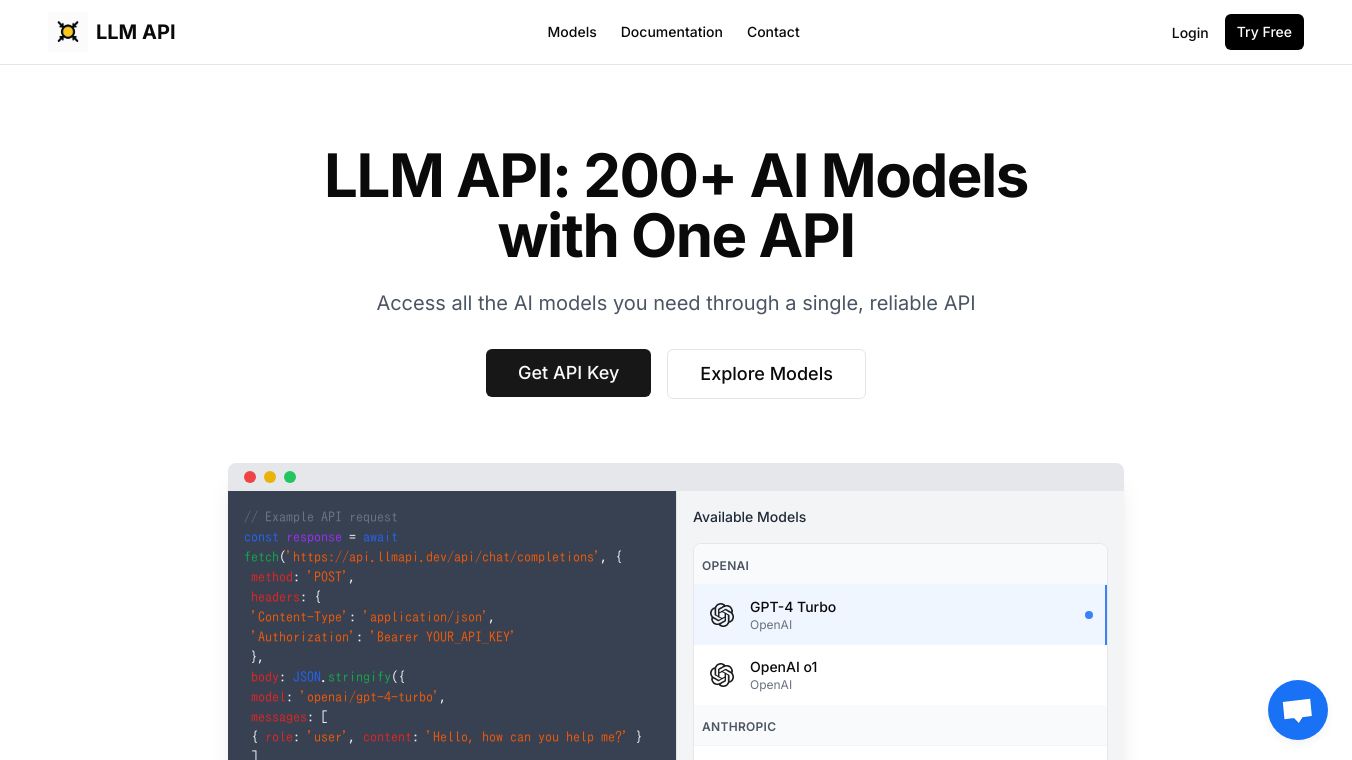

What is LLM API?

LLM API is a platform for integrating and managing multiple Large Language Models (LLMs), such as GPT-4, Claude, and Mistral, through one unified API. It gives developers access to over 200 AI models from providers including OpenAI, Anthropic, Google, and Meta. Because the platform is compatible with the OpenAI SDK, code already written against that SDK can switch providers without being rewritten. Supported features include chat completions, text embeddings, speech recognition, and text-to-speech generation, which makes LLM API suitable for both individual developers and enterprises.
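To make the OpenAI compatibility concrete, here is a minimal sketch of a chat completions request in the OpenAI wire format, built with the Python standard library only. The base URL `https://api.example.com/v1` is a placeholder and the model names are illustrative; neither comes from the platform's documentation, so check the real values there. The request is built but not sent.

```python
import json
import urllib.request

# Placeholder base URL (an assumption); use the one from the provider's docs.
BASE_URL = "https://api.example.com/v1"


def build_chat_request(api_key: str, model: str, prompt: str,
                       base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url.rstrip('/')}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


# Switching models changes only the `model` string, not the request shape.
# The names below are illustrative, not confirmed model identifiers.
requests_by_model = {
    name: build_chat_request("YOUR_API_KEY", name, "Say hello.")
    for name in ("gpt-4", "claude", "mistral")
}
```

Passing one of these requests to `urllib.request.urlopen` would send it. With the OpenAI SDK itself, the equivalent step is usually to set the client's base URL and API key and leave the rest of the calling code unchanged.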

Benefits

LLM API offers several key advantages:

- Unified API: Access multiple LLMs through a single, consistent API endpoint, reducing integration complexity.

- Centralized Billing and Management: Consolidate all LLM usage under one account, simplifying cost tracking and management.

- Consistent Response Formats: Enjoy uniform data structures across different models, making it easier to maintain clean and predictable code.

- Flexible Usage Plans: Scale your AI projects seamlessly with adaptable plans that support both experimentation and full-scale production deployments.

- Comprehensive Documentation: Benefit from clear, well-organized documentation and straightforward onboarding processes.

- Experimentation and Optimization: Easily compare different LLMs to find the best fit for your specific use cases.
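The consistent-format and model-comparison points above can be illustrated with a short sketch. It assumes responses follow the OpenAI chat completions shape (`choices[0].message.content` and `usage.total_tokens`), which the platform's OpenAI compatibility suggests but this article does not spell out. The sample responses are hand-written for illustration, not real output, and `model-a` and `model-b` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass
class Reply:
    model: str
    text: str
    total_tokens: int


def parse_reply(response: dict) -> Reply:
    """Extract the commonly needed fields from an OpenAI-style response."""
    return Reply(
        model=response["model"],
        text=response["choices"][0]["message"]["content"],
        total_tokens=response["usage"]["total_tokens"],
    )


# Hand-written sample responses (hypothetical), one per model.
samples = [
    {
        "model": "model-a",
        "choices": [{"message": {"role": "assistant", "content": "Hello!"}}],
        "usage": {"prompt_tokens": 5, "completion_tokens": 2, "total_tokens": 7},
    },
    {
        "model": "model-b",
        "choices": [{"message": {"role": "assistant", "content": "Hi there, friend."}}],
        "usage": {"prompt_tokens": 5, "completion_tokens": 5, "total_tokens": 10},
    },
]

# One parser handles every model because the structure is the same.
replies = [parse_reply(r) for r in samples]
cheapest = min(replies, key=lambda r: r.total_tokens)
```

Because every reply parses the same way, a comparison harness only needs to loop over model names. Here `cheapest` simply picks the reply that used the fewest tokens, and a real comparison would also weigh answer quality.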

Use Cases

LLM API is ideal for a variety of applications, including:

- Prototyping and Experimentation: Rapidly test and iterate with different LLMs to find the most suitable model for your needs.

- Production Deployments: Build and deploy AI-powered applications with confidence, knowing that your integrations are streamlined and scalable.

- Cost Management: Simplify billing and cost tracking across multiple AI models, making it easier to manage your budget.

- Developer Efficiency: Focus on building innovative products rather than managing complex integrations and APIs.

Pricing

LLM API uses a pay-as-you-go pricing model: you pay only for what you use, so costs scale with usage for both small and large projects.
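As a rough illustration of usage-based billing, the sketch below estimates the cost of a request from its token counts. It assumes per-token pricing quoted per million tokens, which is common among LLM providers but is not stated in this article. Every price and model name here is hypothetical.

```python
from decimal import Decimal

# Hypothetical prices in USD per 1 million tokens: (input, output).
PRICES = {
    "model-a": (Decimal("1.00"), Decimal("2.00")),
    "model-b": (Decimal("0.25"), Decimal("1.25")),
}

TOKENS_PER_UNIT = Decimal(1_000_000)


def estimate_cost(model: str, prompt_tokens: int, completion_tokens: int) -> Decimal:
    """Estimate the USD cost of one request under the assumed price table."""
    input_price, output_price = PRICES[model]
    total = prompt_tokens * input_price + completion_tokens * output_price
    return total / TOKENS_PER_UNIT


# Compare the same workload across the two hypothetical models.
workload = {"prompt_tokens": 1_000_000, "completion_tokens": 500_000}
costs = {model: estimate_cost(model, **workload) for model in PRICES}
```

`Decimal` is used instead of `float` so that small per-request amounts add up without rounding drift when summed over many requests.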

Vibes

No specific user reviews are available. Judging by its features alone, the platform is likely to appeal to developers and enterprises that want to simplify their AI integrations, given its emphasis on efficiency, scalability, and ease of use.

Additional Information

The platform guarantees 99% uptime and offers round-the-clock customer support. Users can also look up detailed model parameters and pricing directly through the API.

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
