Keys and Caches

Keys and Caches: A Unified View of Your Machine Learning Stack

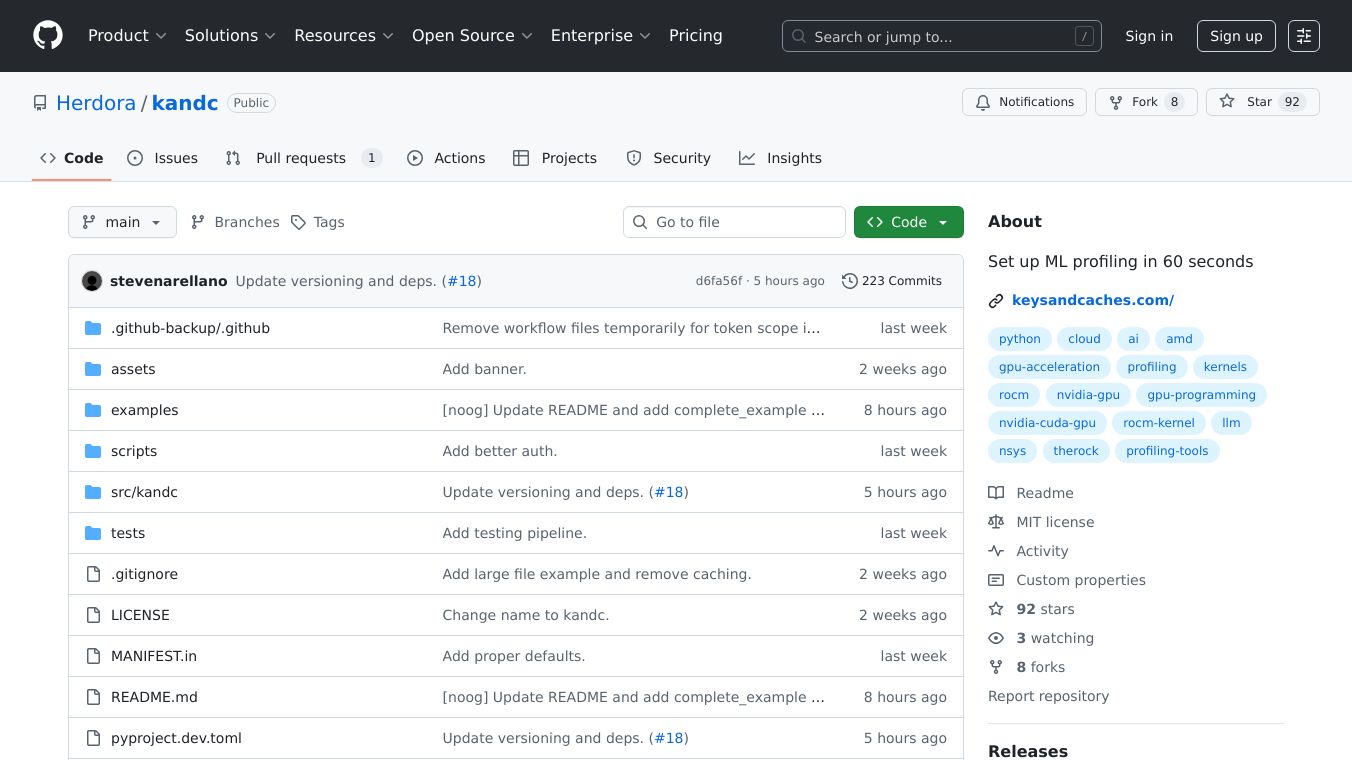

Keys and Caches is an open-source Python library designed to provide a comprehensive view of your entire machine learning stack, from PyTorch down to the GPU. It offers experiment tracking and workflow management, making it easier to monitor and optimize your machine learning projects.

Benefits

Keys and Caches offers several key benefits for machine learning practitioners:

- Experiment Tracking: Automatically log metrics and hyperparameters to keep track of your experiments.

- Cloud Dashboard: Visualize your experiments in real-time with an easy-to-use cloud dashboard.

- Project Organization: Group related experiments together to keep your projects organized.

- Zero Overhead When Disabled: Tracking activates only after kandc.init() is called, and the disabled mode turns tracking calls into no-ops, so instrumented code adds no tracking overhead when tracking is off.
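
The zero-overhead claim typically rests on a simple pattern: every logging call first checks whether a run is active and returns immediately if it is not. The sketch below illustrates that pattern with a hypothetical `MiniTracker` class. It is not kandc's actual implementation, and every name in it is invented for illustration.

```python
class MiniTracker:
    """Hypothetical stand-in showing a tracker that is a no-op until initialized."""

    def __init__(self):
        self.active = False  # nothing is recorded until init() is called
        self.records = []

    def init(self, mode="online"):
        # In "disabled" mode the tracker stays inactive, so log() does nothing.
        self.active = mode != "disabled"

    def log(self, metrics, step=None):
        if not self.active:
            return  # early exit: no copying, no I/O
        self.records.append({"step": step, **metrics})


tracker = MiniTracker()
tracker.log({"loss": 0.5})          # ignored: init() has not been called
tracker.init(mode="disabled")
tracker.log({"loss": 0.4})          # ignored: tracking is disabled
tracker.init(mode="offline")
tracker.log({"loss": 0.3}, step=1)  # recorded
print(tracker.records)              # [{'step': 1, 'loss': 0.3}]
```

Because the inactive path is a single attribute check, leaving logging calls in production code is cheap under this design.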

Use Cases

Keys and Caches is particularly useful for:

- Researchers and Data Scientists: Track and visualize experiments to gain insights and improve model performance.

- Machine Learning Engineers: Manage workflows and monitor training processes efficiently.

- Teams: Collaborate on projects by sharing experiment data and visualizations.

Installation

To get started with Keys and Caches, simply install it using pip:

```bash
pip install kandc
```

Quick Start

Here is a quick example of how to use Keys and Caches in your machine learning project:

```python
import kandc
import torch
import torch.nn as nn


class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(784, 128),
            nn.ReLU(),
            nn.Linear(128, 10),
        )

    def forward(self, x):
        return self.layers(x)


def main():
    # Initialize experiment tracking
    kandc.init(
        project="my-project",
        name="experiment-1",
        config={"batch_size": 32, "learning_rate": 0.01},
    )

    # Your training/inference code
    model = SimpleNet()
    data = torch.randn(32, 784)
    output = model(data)
    loss = output.mean()

    # Log metrics
    kandc.log({"loss": loss.item(), "accuracy": 0.85})

    # Finish the run
    kandc.finish()


if __name__ == "__main__":
    main()
```

Key Features

Simple Initialization

Initialize a new run with your project configuration:

```python
kandc.init(
    project="my-ml-project",
    name="experiment-1",
    config={
        "learning_rate": 0.001,
        "batch_size": 32,
        "model": "resnet18",
    },
)
```

Metrics Logging

Log metrics during your training process:

```python
# Log single or multiple metrics
kandc.log({"loss": 0.25, "accuracy": 0.92})

# Log with step numbers for training loops
for epoch in range(100):
    loss = train_epoch()
    kandc.log({"epoch_loss": loss}, step=epoch)
```

Multiple Modes

Choose the mode that best fits your needs:

```python
# Online mode (default) - full cloud experience
kandc.init(project="my-project")

# Offline mode - local development
kandc.init(project="my-project", mode="offline")

# Disabled mode - zero overhead
kandc.init(project="my-project", mode="disabled")
```

Inference Tracking

Track inference performance and results:

```python
import kandc
import torch
import torch.nn as nn


class SimpleNet(nn.Module):
    def __init__(self):
        super().__init__()
        self.layers = nn.Sequential(
            nn.Linear(784, 128),
            nn.ReLU(),
            nn.Linear(128, 10),
        )

    def forward(self, x):
        return self.layers(x)


def run_inference():
    # Initialize inference tracking
    kandc.init(
        project="inference-demo",
        name="simple-inference",
        config={"batch_size": 32},
    )

    # Create model and wrap with profiler
    model = SimpleNet()
    model = kandc.capture_model_instance(model, model_name="SimpleNet")
    model.eval()

    # Run inference
    data = torch.randn(32, 784)
    with torch.no_grad():
        predictions = model(data)
        confidence = torch.softmax(predictions, dim=1).max(dim=1)[0].mean()

    # Log results
    kandc.log({
        "avg_confidence": confidence.item(),
        "batch_size": 32,
    })

    kandc.finish()


if __name__ == "__main__":
    run_inference()
```

Examples

For more detailed examples, check out the examples/ directory in the Keys and Caches repository. These examples cover various use cases, including:

- complete_example.py: A simple getting-started example.

- offline_example.py: Usage in offline mode.

- profiler_example.py: Performance profiling with model tracing.

- timed_block.py: Using timing decorators and context managers.

- vllm_example.py: Integration with vLLM for LLM inference tracking.
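
To give a feel for what timing a block of code with a context manager involves, here is a minimal standard-library sketch. It is not the contents of timed_block.py and does not use kandc's own timing utilities. The `timed` helper and the `forward_pass` label are hypothetical names chosen for illustration.

```python
import time
from contextlib import contextmanager


@contextmanager
def timed(name, sink):
    """Time the enclosed block and store the duration in `sink` (a dict)."""
    start = time.perf_counter()
    try:
        yield
    finally:
        # Record elapsed wall-clock time in milliseconds, even if the block raises.
        sink[f"{name}_ms"] = (time.perf_counter() - start) * 1000.0


metrics = {}
with timed("forward_pass", metrics):
    total = sum(i * i for i in range(100_000))  # placeholder workload

print(metrics)  # a dict with a single key, "forward_pass_ms"
```

With a run initialized, a dictionary like this could then be passed to kandc.log(metrics) in the same way as any other metrics.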

API Reference

Core Functions

- kandc.init(): Initialize a new run with configuration.

- kandc.finish(): Finish the current run and save all data.

- kandc.log(): Log metrics to the current run.

- kandc.get_current_run(): Get the active run object.

- kandc.is_initialized(): Check if kandc is initialized.
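
One way these functions can be combined is a small guard that logs only when a run is active, which is useful in library code that may be called with or without tracking. The sketch below assumes that kandc.is_initialized() returns a truthy value while a run is active and that kandc.log() accepts an optional `step` keyword, as in the logging example earlier. The `log_if_active` helper is hypothetical, and a stand-in object replaces the `kandc` module so the snippet runs on its own.

```python
from types import SimpleNamespace


def log_if_active(tracker, metrics, step=None):
    """Log only when a run is active; return True if a log call was made."""
    if not tracker.is_initialized():
        return False
    if step is None:
        tracker.log(metrics)
    else:
        tracker.log(metrics, step=step)
    return True


# Stand-in object so the sketch runs without kandc installed.
calls = []
fake_tracker = SimpleNamespace(
    is_initialized=lambda: True,
    log=lambda metrics, step=None: calls.append((metrics, step)),
)

log_if_active(fake_tracker, {"loss": 0.25}, step=3)
print(calls)  # [({'loss': 0.25}, 3)]
```

In real code, the `kandc` module itself would be passed as `tracker`, for example `log_if_active(kandc, {"loss": 0.25}, step=3)`.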

Run Modes

- "online": Default mode, full cloud functionality.

- "offline": Save everything locally, no server sync.

- "disabled": No-op mode, zero overhead.

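Since the run mode is an ordinary string, it can be chosen at runtime, for instance to disable tracking in CI without editing code. The sketch below reads the mode from an environment variable and validates it against the three modes listed above. The variable name `TRACKING_MODE` and the `resolve_mode` helper are hypothetical; kandc itself is not known to read this variable.

```python
import os

VALID_MODES = ("online", "offline", "disabled")


def resolve_mode(env=None, default="online"):
    """Pick a run mode from the hypothetical TRACKING_MODE variable."""
    env = os.environ if env is None else env
    mode = env.get("TRACKING_MODE", default).strip().lower()
    if mode not in VALID_MODES:
        raise ValueError(f"unknown mode {mode!r}; expected one of {VALID_MODES}")
    return mode


print(resolve_mode({"TRACKING_MODE": "Offline"}))  # offline
print(resolve_mode({}))                            # online
```

The result could then be passed along as kandc.init(project="my-project", mode=resolve_mode()).
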
Additional Information

Keys and Caches aims to get ML profiling set up in about 60 seconds. It is an open-source project, and you can find more information and contribute to its development on the GitHub repository.
