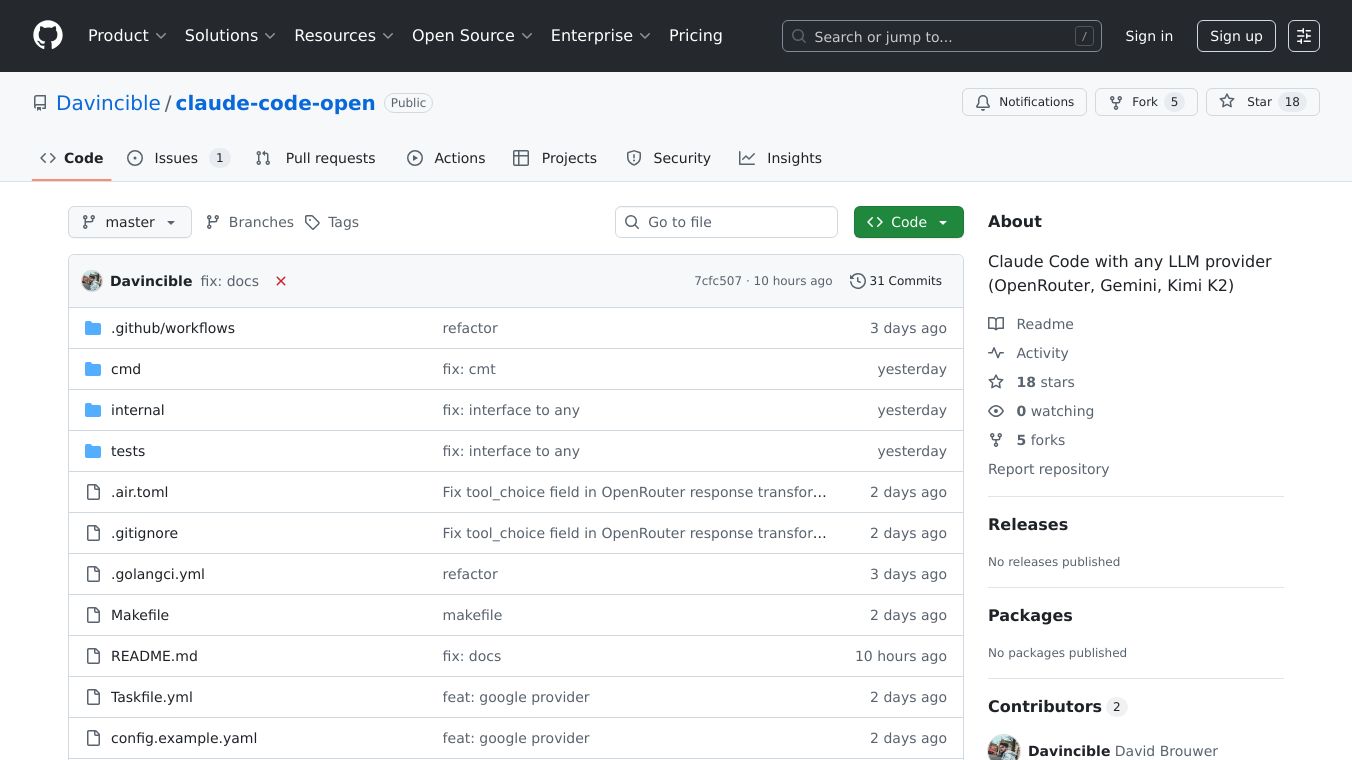

Claude Code Open

What is Claude Code Open?

Claude Code Open is a universal LLM proxy that connects Claude Code to any language model provider. It is designed as a production-ready server that translates between Anthropic's Claude API format, which Claude Code uses, and the formats of other LLM providers. It is built with Go for performance and reliability and supports multiple providers, including OpenRouter, OpenAI, Anthropic, NVIDIA, and Google Gemini.

Benefits

Claude Code Open offers several key advantages:

- Universal Compatibility: Connects Claude Code to a wide range of language model providers, making it versatile for different use cases.

- High Performance: Built with Go, ensuring high performance and reliability.

- Easy Setup: Requires only the `CCO_API_KEY` environment variable to get started, making it user-friendly.

- Advanced Configuration: Supports YAML configuration with automatic defaults, model whitelisting, dynamic model selection, and API key protection.

- Dynamic Request Transformation: Automatically detects and transforms requests, ensuring seamless integration with different providers.

- Streaming Support: Supports streaming for all providers, enhancing real-time interactions.

- Modular Architecture: The provider system is modular, allowing for easy integration of new providers.

- Comprehensive Monitoring: Includes health checks, logs, and metrics for monitoring and troubleshooting.
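
To make the modular provider system and model whitelisting more concrete, here is a minimal Go sketch of how such a design could look. It is not taken from the Claude Code Open source: the `Provider` interface, the `Registry` type, the `NewStaticProvider` helper, and the `provider/model` string format are all hypothetical names chosen for illustration, and the model names are placeholders.

```go
package main

import (
	"fmt"
	"strings"
)

// Provider is a hypothetical interface for illustration only;
// the real project's provider API may look different.
type Provider interface {
	Name() string
	// Allows reports whether the model passes this provider's whitelist.
	Allows(model string) bool
}

type staticProvider struct {
	name      string
	whitelist map[string]bool // an empty whitelist allows every model
}

func (p staticProvider) Name() string { return p.name }

func (p staticProvider) Allows(model string) bool {
	if len(p.whitelist) == 0 {
		return true
	}
	return p.whitelist[model]
}

// NewStaticProvider builds a provider with an optional model whitelist.
func NewStaticProvider(name string, models ...string) Provider {
	wl := make(map[string]bool, len(models))
	for _, m := range models {
		wl[m] = true
	}
	return staticProvider{name: name, whitelist: wl}
}

// Registry maps provider names to implementations, so supporting a
// new provider only requires one more Register call.
type Registry struct {
	providers map[string]Provider
}

func NewRegistry() *Registry {
	return &Registry{providers: make(map[string]Provider)}
}

func (r *Registry) Register(p Provider) { r.providers[p.Name()] = p }

// Resolve splits an assumed "provider/model" spec at the first slash,
// looks up the provider, and enforces its whitelist.
func (r *Registry) Resolve(spec string) (Provider, string, error) {
	name, model, ok := strings.Cut(spec, "/")
	if !ok || name == "" || model == "" {
		return nil, "", fmt.Errorf("expected provider/model, got %q", spec)
	}
	p, found := r.providers[name]
	if !found {
		return nil, "", fmt.Errorf("unknown provider %q", name)
	}
	if !p.Allows(model) {
		return nil, "", fmt.Errorf("model %q is not whitelisted for %q", model, name)
	}
	return p, model, nil
}

func main() {
	reg := NewRegistry()
	reg.Register(NewStaticProvider("openai", "gpt-4o")) // whitelisted to one model
	reg.Register(NewStaticProvider("gemini"))           // no whitelist: any model

	for _, spec := range []string{"openai/gpt-4o", "openai/other", "gemini/any-model"} {
		if p, model, err := reg.Resolve(spec); err != nil {
			fmt.Println("rejected:", err)
		} else {
			fmt.Printf("route %s to provider %s\n", model, p.Name())
		}
	}
}
```

Keeping the whitelist check inside the provider, rather than in the registry, is one way to let each provider define its own rules; the actual project may organize this differently.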

Use Cases

Claude Code Open can be used in various scenarios:

- Developers: Easily integrate Claude Code with different language model providers for their applications.

- Enterprises: Deploy a reliable and high-performance LLM proxy server for internal use.

- Researchers: Experiment with different language models and providers without changing the core integration code.

- Cloud Services: Use the proxy to manage and route requests to different providers based on specific needs.

Additional Information

Claude Code Open is licensed under the MIT License, which permits free use, modification, and community contributions. The project includes a changelog detailing new features and improvements. The latest release includes support for NVIDIA and Google Gemini, YAML configuration, model whitelisting, API key protection, an enhanced CLI, comprehensive testing, default model management, and streaming tool calls.

For production deployment, a systemd service can be created on Linux. The project also includes both a traditional `Makefile` and a modern `Taskfile.yml` for task automation, making it easy to manage and deploy.

