BenchLLM

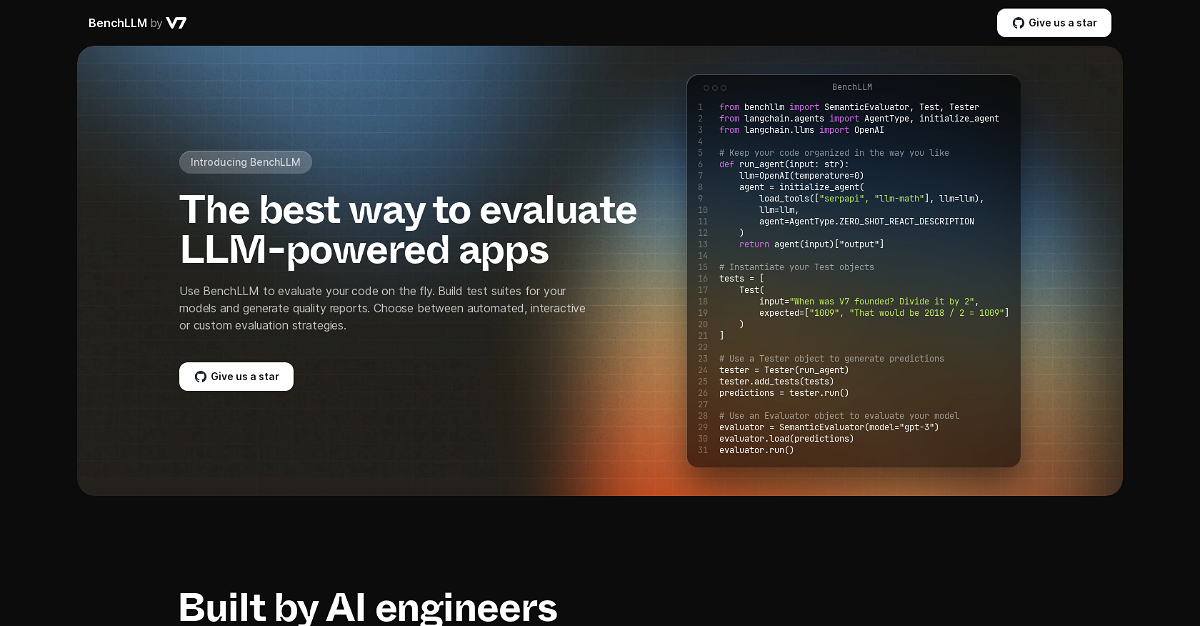

BenchLLM is a user-friendly tool for evaluating large language models (LLMs) and AI applications. It simplifies the evaluation process, letting you run and assess models with a few command-line commands.

Highlights:

- Effortless Evaluation: Define tests in JSON or YAML, organize them into suites, and run evaluations automatically.

- Flexible & Powerful: Supports popular APIs and frameworks such as OpenAI and LangChain, so you can integrate it with a range of AI tools and services.

- Insightful Reporting: Generate detailed reports and visualizations to monitor model performance and identify potential regressions.
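The workflow in the highlights above (tests defined as data, grouped into suites, evaluated automatically, and summarized in a report) can be sketched in plain Python. This is a hypothetical illustration, not BenchLLM's own API: the `input`/`expected` field names, the suite names, and the `fake_model` stand-in are assumptions. Tests are stored as JSON here; a YAML file would work the same way once parsed with a YAML library such as PyYAML.

```python
import json
from typing import Callable

# Hypothetical suites: each test pairs an input prompt with acceptable answers.
# The field names "input" and "expected" are assumptions for this sketch.
SUITES_JSON = """
{
  "arithmetic": [
    {"input": "What's 1+1? Answer with a number only.", "expected": ["2", "2.0"]},
    {"input": "What's 3*3? Answer with a number only.", "expected": ["9"]}
  ],
  "capitals": [
    {"input": "Capital of France? One word.", "expected": ["Paris"]}
  ]
}
"""


def fake_model(prompt: str) -> str:
    """Hypothetical stand-in for the model or app under test."""
    canned = {
        "What's 1+1? Answer with a number only.": "2",
        "What's 3*3? Answer with a number only.": "6",  # deliberately wrong
        "Capital of France? One word.": "paris",
    }
    return canned.get(prompt, "")


def string_match(output: str, expected: list[str]) -> bool:
    """Pass if the output equals any acceptable answer, ignoring case and whitespace."""
    normalized = output.strip().lower()
    return any(normalized == e.strip().lower() for e in expected)


def evaluate(suites: dict, model: Callable[[str], str]) -> dict[str, dict]:
    """Run every test in every suite and return a per-suite report."""
    report = {}
    for name, tests in suites.items():
        passed = sum(string_match(model(t["input"]), t["expected"]) for t in tests)
        report[name] = {
            "passed": passed,
            "total": len(tests),
            "pass_rate": passed / len(tests),
        }
    return report


if __name__ == "__main__":
    suites = json.loads(SUITES_JSON)
    for name, stats in evaluate(suites, fake_model).items():
        print(f"{name}: {stats['passed']}/{stats['total']} passed")
```

Running the script prints one line per suite: the deliberately wrong arithmetic answer shows up as `arithmetic: 1/2 passed`, while `capitals: 1/1 passed` succeeds because the evaluator ignores case and surrounding whitespace. Keeping per-suite pass rates from run to run is one simple way to spot regressions.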

Key Features:

- CLI Integration: Streamline your workflow by running and evaluating models directly from the command line.

- Customizable Testing: Choose an automated, interactive, or custom evaluation strategy to suit your specific needs.

- CI/CD Integration: Run evaluations automatically within your continuous integration and continuous delivery pipelines.
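The customizable-testing and CI/CD features above can be illustrated with a small, hypothetical Python sketch (not BenchLLM's own API). Evaluation strategies are plain functions sharing one signature, so an automated check, an interactive human review, or a custom rule can be swapped in by name; a CI gate then turns the resulting pass rate into a process exit code that fails the job when the rate drops below a recorded baseline. The function names, the `EVALUATORS` registry, and the baseline threshold are all assumptions made for illustration.

```python
from typing import Callable

# An evaluator decides whether a model output is acceptable (hypothetical signature).
Evaluator = Callable[[str, list[str]], bool]


def exact(output: str, expected: list[str]) -> bool:
    """Automated: case-insensitive exact match against any expected answer."""
    return output.strip().lower() in {e.strip().lower() for e in expected}


def contains(output: str, expected: list[str]) -> bool:
    """Custom rule: pass if any expected answer appears anywhere in the output."""
    return any(e.lower() in output.lower() for e in expected)


def interactive(output: str, expected: list[str]) -> bool:
    """Interactive: ask a human reviewer to accept or reject the output."""
    answer = input(f"Output: {output!r}\nExpected: {expected}\nAccept? [y/n] ")
    return answer.strip().lower().startswith("y")


# Hypothetical registry so a strategy can be selected by name (e.g. from a CLI flag).
EVALUATORS: dict[str, Evaluator] = {
    "exact": exact,
    "contains": contains,
    "interactive": interactive,
}


def pass_rate(results: list[tuple[str, list[str]]], evaluator: Evaluator) -> float:
    """Fraction of (output, expected) pairs the evaluator accepts."""
    if not results:
        return 0.0
    return sum(evaluator(out, exp) for out, exp in results) / len(results)


def ci_gate(rate: float, baseline: float, tolerance: float = 0.0) -> int:
    """Return a process exit code: 0 if there is no regression, 1 otherwise."""
    return 0 if rate >= baseline - tolerance else 1


if __name__ == "__main__":
    # Hypothetical recorded outputs paired with their expected answers.
    results = [
        ("The answer is 2.", ["2"]),
        ("Paris", ["Paris"]),
        ("I don't know", ["Berlin"]),
    ]
    rate = pass_rate(results, EVALUATORS["contains"])
    exit_code = ci_gate(rate, baseline=0.6)
    print(f"pass rate: {rate:.2f}, exit code: {exit_code}")
```

With the `contains` strategy two of the three outputs pass, so the rate (about 0.67) clears the 0.6 baseline and the exit code is 0; switching to `exact` drops the rate to about 0.33 and the gate returns 1. In a real pipeline the script would end with `sys.exit(exit_code)` so a nonzero code fails the CI job, and `tolerance` leaves room for small run-to-run variation in model output.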

This content is either user submitted or generated using AI technology (including, but not limited to, Google Gemini API, Llama, Grok, and Mistral), based on automated research and analysis of public data sources from search engines like DuckDuckGo, Google Search, and SearXNG, and directly from the tool's own website and with minimal to no human editing/review. THEJO AI is not affiliated with or endorsed by the AI tools or services mentioned. This is provided for informational and reference purposes only, is not an endorsement or official advice, and may contain inaccuracies or biases. Please verify details with original sources.
